...

| Field name | Content | Examples |

|---|---|---|

| Scheduler | Type of scheduling to use. Default is to use the dynamic scheduler, so scheduling units are picked up automatically, but it is possible to specify fixed time scheduling. | Dynamic |

| time at | Run observations at this specific time. Only use this if really required, e.g., the target is also observed with another telescope. Specifying this option circumvents priority scheduling and should be used with care. | |

| time after | Minimum start time of this scheduling unit. Default: start of cycle | 2021-06-01 00:00:00 |

| time before | Maximum end time of this scheduling unit. Default: end of cycle | 2021-11-30 23:59:59 |

| time between | Only schedule within the time windows specified here. More time windows can be specified by pressing the + button below. This can be used to single out observations that should run monthly, by specifying a few days in which these observations could run. | From: 2021-07-08 00:00:00 Until: 2021-07-10 23:00:00 |

| time not between | Do not schedule within the time windows specified here. More time-windows can be specified by pressing the + button below. | From: 2021-07-08 00:00:00 Until: 2021-07-10 23:00:00 |

| Daily | require_day : Day time observations. Run when the sun is higher than 10 degrees above the horizon at the Superterp. require_night : Night time observations. Run when the sun is lower than 10 degrees above the horizon at the Superterp. avoid_twilight : Avoid sunrise and sunset. Run when the sun is higher than 10 degrees above the horizon at the Superterp, or lower than 10 degrees below the horizon. | |

| transit_offset | Offset in (UTC) seconds from transit for all target beams in the observation. Alternatively, you can specify the reference pointing as the reference for transit. When the observation is split into shorter chunks to be observed at different LST ranges, please take into account a one-minute gap between subsequent observations. | from -7200 to 7200 from -7200 to -3600 from 3600 to 7200 |

| min_distance | Minimum distance to the Sun, Moon and Jupiter (latter mostly relevant below 30 MHz) in degrees (backend uses radians). Current default 30, or 28.64 degrees | Sun: 30 Moon: 30 Jupiter: 30 (< 40 MHz ) else 15 |

| min_elevation.target | Minimum elevation for all SAPs in the target observations | |

| min_elevation.calibrator | Minimum elevation for the SAP of all calibrator observations | |

| Reference pointing | If true, will be used for scheduling calculations, taking into account the transit_offset. Note: If used, the reference pointing direction should be identical to the tile beam pointing direction (for HBA). | enabled=false enabled=true |
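The time-window and distance constraints in the table above can be illustrated with a minimal Python sketch. It is not the TMSS scheduler logic: the function names are hypothetical, and it assumes that an observation must fit entirely inside a `time between` window and must not overlap any `time not between` window. The conversion helper reflects the note that the backend stores `min_distance` in radians.

```python
import math
from datetime import datetime

# Timestamp format used in the examples column of the table above.
FMT = "%Y-%m-%d %H:%M:%S"


def parse(timestamp):
    """Parse a timestamp such as '2021-07-08 00:00:00'."""
    return datetime.strptime(timestamp, FMT)


def is_allowed(start, end, between=(), not_between=()):
    """Check an observation interval against the two window lists.

    Assumption (hypothetical, for illustration only):
    - between:     the interval must fit entirely inside at least one
                   window; an empty list means no restriction.
    - not_between: the interval must not overlap any of these windows.
    """
    inside = not between or any(lo <= start and end <= hi for lo, hi in between)
    clear = all(end <= lo or start >= hi for lo, hi in not_between)
    return inside and clear


def degrees_to_radians(degrees):
    """Convert a min_distance value given in degrees to radians."""
    return math.radians(degrees)


if __name__ == "__main__":
    window = (parse("2021-07-08 00:00:00"), parse("2021-07-10 23:00:00"))
    start, end = parse("2021-07-09 01:00:00"), parse("2021-07-09 03:00:00")
    print(is_allowed(start, end, between=[window]))
    print(is_allowed(start, end, not_between=[window]))
    print(degrees_to_radians(30))
```

Whether partially overlapping observations are accepted by the real scheduler is not stated here, so the strict "fits entirely" rule should be read as one possible interpretation.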

...

| Task Parameter | Present in these strategies | Description | Examples |

|---|---|---|---|

| Duration | All except BF FE - Ionospheric Scintillation and FE RT Test | Observation duration in hour:min:sec. For HBA strategies there are additional duration fields for the calibrators and/or additional targets/beam pointing. Similarly some modes have Target Duration which is given in seconds. | 00:02:00 (hour:min:sec) 28800 (sec) |

| Observation Description | All except BF FE - Ionospheric Scintillation and FE RT Test | Usually target (and/or calibrator) name | OOO.O _Target_name_ FRB YYYYMMDDA Paaa+01 & Paaa+02 |

| Pipeline Description | All except BF FE - Ionospheric Scintillation and FE RT Test | Usually target pipeline (and/or calibrator pipeline) name | oOOO.O _Target_name_ FRB YYYYMMDDA/pulp Paaa+01/TP |

| Target Pointing | All except BF FE - Ionospheric Scintillation and FE RT Test | Define pointing to target(s) and/or calibrator(s), given in Angle 1 (RA), Angle 2 (DEC), Reference frame (J2000) and target name | Angle 1: 02h31m49.09s Angle 2: 89d15m50.8s Reference frame: J2000 Target: _Target_name_ |

| Digifil options | BF CV FRB | Relevant options for single-pulse searches | DM: 0.0001 Nr of Frequency Channels: 320 Coherent Dedispersion: true Integration time: 4 |

| Raw output | BF CV FRB and BF CV Timing Scintillation | Whether to include the raw data in each output data product | false true |

| Sub-band per file | BF CV FRB and BF CV Timing Scintillation | The maximum number of sub-bands to write in each output data product. | 20 |

| Optimize period and DM | BF CV Timing Scintillation and BF Pulsar Timing | | false true |

| Sub-integration time | BF CV Timing Scintillation and BF Pulsar Timing | | 10 |

| Frequency channel per file | BF CV Timing Scintillation | Number of frequency channels per part (multiple of subbands per part). | 120 |

| Duration FE (1..4) | BF FE - Ionospheric Scintillation, FE RT Test and Solar Campaign | Duration of (one of) the fly's eye (FE) observation(s) in seconds. Needs to fit within the overall SU duration. | 300 (sec) |

| Description FE (1..4) | Solar Campaign | | IPS FE1 |

| Pointing FE (1..4) | Solar Campaign | Define FE pointing given in Angle 1 (RA), Angle 2 (DEC), Reference frame (J2000) and IPS name | Angle 1: 02h31m49.09s Angle 2: 89d15m50.8s Reference frame: J2000 Target: _Target_name_IPS |

| Frequency | IM HBA 1 beam and IM LBA 1 beam | Frequency range of the observation, i.e. bandwidth covered. | LBA: 21.4-69.0 HBA: 120.2-126.7,126.9-131.9,132.1-135.3 etc. |

| Sub-bands | IM HBA 1 beam and IM LBA 1 beam | Sub-band numbers specification (alternative to frequency). For Range enter Start and End separated by 2 dots. Multiple ranges can be separated by comma. Minimum should be 0 and maximum should be 511. For example 11..20, 30..50 | false true HBA: 104..136,138..163,165..180,182..184,187..209,212..213, etc. |

| Run adder | IM HBA 1 beam, IM LBA 1 beam, IM HBA LoTSS 2 beam and IM LBA LoDSS 5 beam | Do/Don't create plots from the QA file from the observation | false true |

| Tile Beam | IM HBA LoTSS 2 beam | HBA Only, insert pointing of the tile beam given in Angle 1 (RA), Angle 2 (DEC), Reference frame (J2000) and target name. Should be between (average of) the SAP pointings. | Angle 1: 02h31m49.09s Angle 2: 89d15m50.8s Reference frame: J2000 Target: Paaa+01Paaa+02REF |

| Filter | IM LBA 1 beam | Bandpass filter applied | LBA_10_90 HBA_110_190 |

| Antenna set | IM LBA 1 beam | Select the antenna mode to observe with | LBA_SPARSE_EVEN HBA_DUAL_INNER |

| Time averaging steps | IM LBA 1 beam, IM LBA 1 beam and IM LBA Survey 3 beam | Factor used to average the data in time | 2 |

| Freq averaging steps | IM LBA 1 beam, IM LBA 1 beam and IM LBA Survey 3 beam | Factor used to average the data in frequency | 3 |

| Demix pipeline | IM LBA 1 beam and IM LBA Survey 3 beam | Given in several drop-down menus per target/calibrator, where the user can select which sources to demix. Note that the time step and frequency step in this menu have to be multiples of the previously defined averaging steps. | Sources: CasA, CygA, HerA, HydraA, TauA, VirA Time step: 8 Ignore Target: false Frequency step: 64 |
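The Sub-bands notation described in the table above (ranges written as `Start..End`, several ranges separated by commas, values between 0 and 511) can be expanded as in the following minimal sketch. The function `parse_subbands` is hypothetical and not part of TMSS; accepting a single number without `..` is an assumption made here for convenience.

```python
def parse_subbands(spec, lowest=0, highest=511):
    """Expand a specification such as '11..20, 30..50' into a sorted list.

    Ranges are inclusive on both ends. A single number without '..' is
    treated as a one-element range (an assumption of this sketch).
    """
    subbands = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing comma
        if ".." in part:
            start_text, end_text = part.split("..", 1)
            start, end = int(start_text), int(end_text)
        else:
            start = end = int(part)
        if start > end:
            raise ValueError(f"range start exceeds end: {part!r}")
        if start < lowest or end > highest:
            raise ValueError(f"sub-band outside {lowest}..{highest}: {part!r}")
        subbands.update(range(start, end + 1))
    return sorted(subbands)


if __name__ == "__main__":
    selected = parse_subbands("11..20, 30..50")
    print(len(selected), selected[0], selected[-1])
```

Collecting the values in a set means overlapping ranges are merged silently; a stricter variant could reject overlaps instead.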

...

Within an Ingest Task, the user has to make sure that the predecessor tasks are correct before the ingest and cleanup happen.

Bulk Scheduling Units Specification (Scheduling Sets)

...

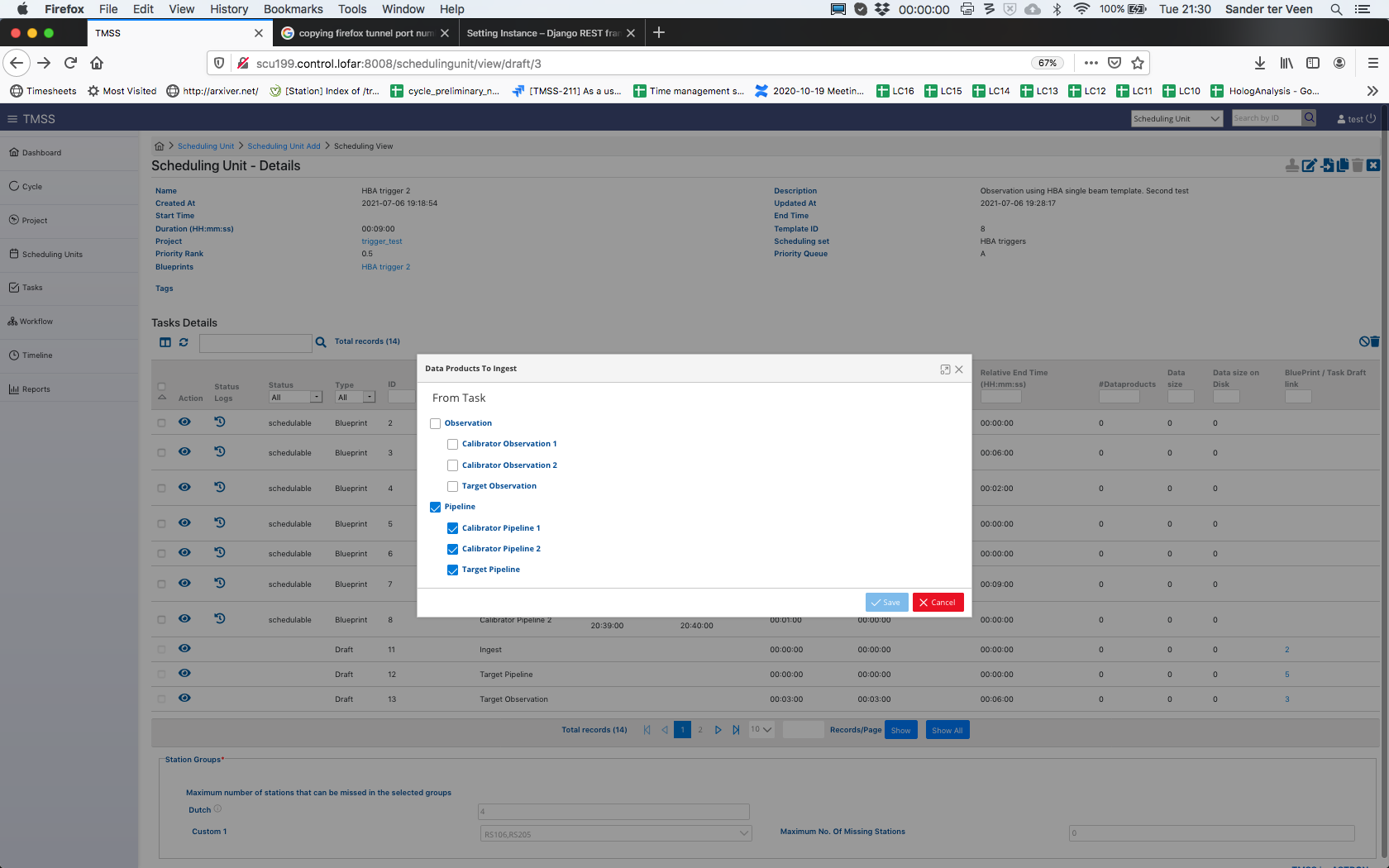

Changing dataproducts to ingest

The dataproducts to ingest are part of the observing strategy. For most observing strategies, we only ingest the pipeline data. To change this and also ingest, for example, the raw data, navigate to the scheduling unit page and press the ingest button. This opens a pop-up where you can select which data products to ingest.

This can be done both for drafts and blueprints.

Bulk Scheduling Units Specification (Scheduling Sets)

During the creation of an SU, the user can add the SU to a Scheduling Set, which can be used as an easy filter in the SU menu to search for the appropriate tasks. If, for example, the pointing for which the SU has been created is part of many different SUs in a project, the SU has to be sorted into the correct Scheduling Set belonging to that project. A user can also create multiple SUs at once that belong to such a Scheduling Set by using the corresponding button. This presents the following menu to the user:

...

It is possible that an observing session results in bad data. This can be due either to not matching the requirements specified in the tasks or to external circumstances (e.g. poor observing conditions). It means that the data can't be accepted, so the corresponding flag needs to be set. It requires the FoP to:

- Set the corresponding SU data acceptance flag to false (see procedure below). Warning: This should be done using the QA workflow interface, but a manual procedure using the TMSS API is also available.

- Evaluate if repeating the failed observing session described by the failed SU is within the policy for the project (i.e. check priority and if the failed session was already a repetition).

- If possible, add more tasks to the SU; to this aim, new tasks can be added to an SU via the "Scheduling Unit - Details" menu, by clicking on the 'paper-and-pencil' icon next to the "Task Details" section.

- If possible, repeat the process of specification and submission by generating a copy of the SU draft; to this aim, copy the failed blueprint of the SU to a (renamed) draft SU and blueprint it. In this case, make sure that the new SU does not retain any fixed timeslot from the failed copy. Also, make sure that any archived data related to the failed SU are removed from the LTA, either by opening a ticket in the Helpdesk (project users) or by directly cleaning up the archive (SDCO operators).

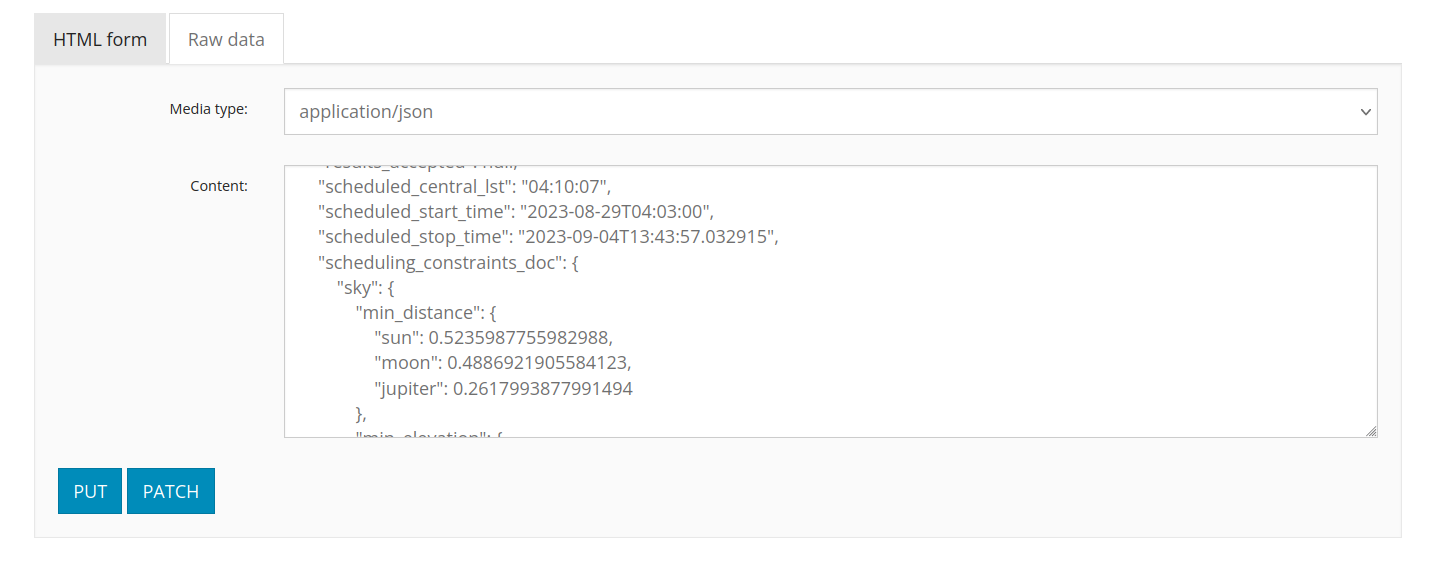

In order to explicitly set the SU data acceptance flag to false, the following procedure can be used:

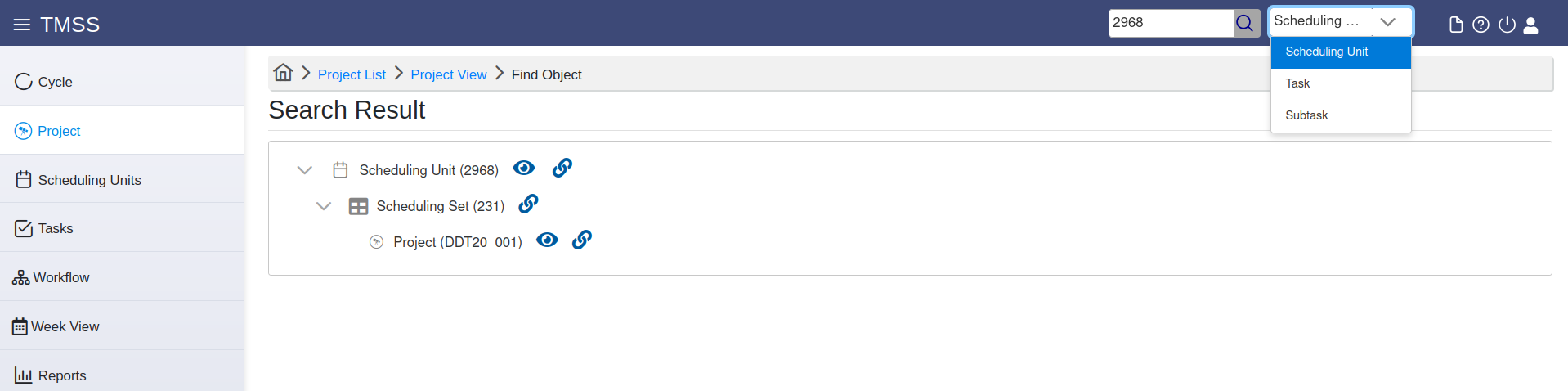

- At the top of the TMSS window, in the search input field we enter the ID of the SU blueprint, while in the drop-down menu next to it we select to search for SUs (this is the default entry, but we can also search for Tasks and Subtasks)

- We then click on the 'link' icon next to the 'Scheduling Unit' entry (the first row in the search results listing).

- The SU API view opens in another browser tab. At the bottom of the page, we click on the 'Raw data' tab in the input form section and, for the 'results_accepted' key in the 'Content' text area, we change its value from 'null' to 'false'.

- To confirm the change, we click the 'Patch' button at the bottom of the page.

- Now, the SU data acceptance flag will be set to false, and any data related to the SU (including pinned data) will be deleted, or will have to be deleted manually if the prior steps were performed before the associated cleanup task has run.

- If data were archived, an LTA cleanup action can be requested by project users via opening a ticket in the Helpdesk or directly performed by SDCO operators.
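For readers who prefer scripting over the browsable API form, the 'Patch' step above corresponds to an HTTP PATCH with a small JSON body. The sketch below only builds such a request with the Python standard library. Only the `results_accepted` key and its `false` value come from the procedure above; the base URL, the `scheduling_unit_blueprint` path segment and the function name are assumptions made for illustration, and authentication is deliberately left out. Check the URL shown in the SU API view before adapting it.

```python
import json
import urllib.request


def build_patch_request(base_url, su_blueprint_id):
    """Build (but do not send) a PATCH request setting results_accepted to false.

    The endpoint layout used here is hypothetical; copy the actual URL
    from the SU API view that opens via the 'link' icon.
    """
    url = f"{base_url.rstrip('/')}/scheduling_unit_blueprint/{su_blueprint_id}/"
    body = json.dumps({"results_accepted": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Placeholder host and ID, not real TMSS values.
    request = build_patch_request("https://tmss.example.org/api", 1234)
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` once valid credentials are attached. As noted above, the QA workflow interface remains the preferred route.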

This concludes the Observation Specifications description. However, this is not where the responsibilities of the FoP end: after a successful observation, the FoP has to do the Reporting.

Reporting

Currently the Reporting tab in TMSS is incomplete and reporting on a scheduling unit level is not yet possible. We will be using the Jira system for handling observation reports. After the observations and subsequent processing are completed, the PI will be notified via the Jira ticket associated to the project and the requested data products will be made available through the Long Term Archive (LTA) as soon as possible.

The Radio Observatory will not have the opportunity to check all data manually before it is exported to the LTA, although some automatic diagnostics are generated for system monitoring, and offline technical monitoring will be carried out regularly. The FoP or the expert user in charge of project support will send to the project PI a notification after each observation with detailed information on where to find the relevant plots. The project team is encouraged to analyze the data, at least preliminarily, soon after the observations, and to get in touch through the ASTRON helpdesk about any possible problems. Observations that turn out to have an issue that makes them unusable may be considered for only one re-run, as soon as the observing schedule allows.

Additional information about the observing and processing policies adopted by ASTRON during the LOFAR Cycles is available at the relevant pages. The FoP has to complete the QA workflow together with the TO and the PI.

QA workflow

Accessing the workflow view

There are multiple ways to access the workflow administration space for a given SU:

- If looking for a specific workflow, it is possible to find it by:

  - Using the search field in the upper bar on every TMSS page, we can search for SUs using their blueprint IDs. The drop-down menu next to the search box needs to be set to the (default) value of 'Scheduling Unit' (it can also be used to search for Task and Sub-task IDs). Then, we click on the 'eye' icon next to the scheduling unit and, on the SU details screen which opens, click on the 'workflow' icon (second from the left in the row of icons on the top right of the page, across from the page title).

  - Going to the scheduling units menu page, selecting Blueprint from the top drop-down menu, then entering the SU blueprint ID in the corresponding column and pressing "Enter" to search. Then, we click on the 'eye' icon on the left to see the SU blueprint. Once on the details page, we click on the View Workflow icon (second from the left at the top of the page). The workflow page for this SU opens.

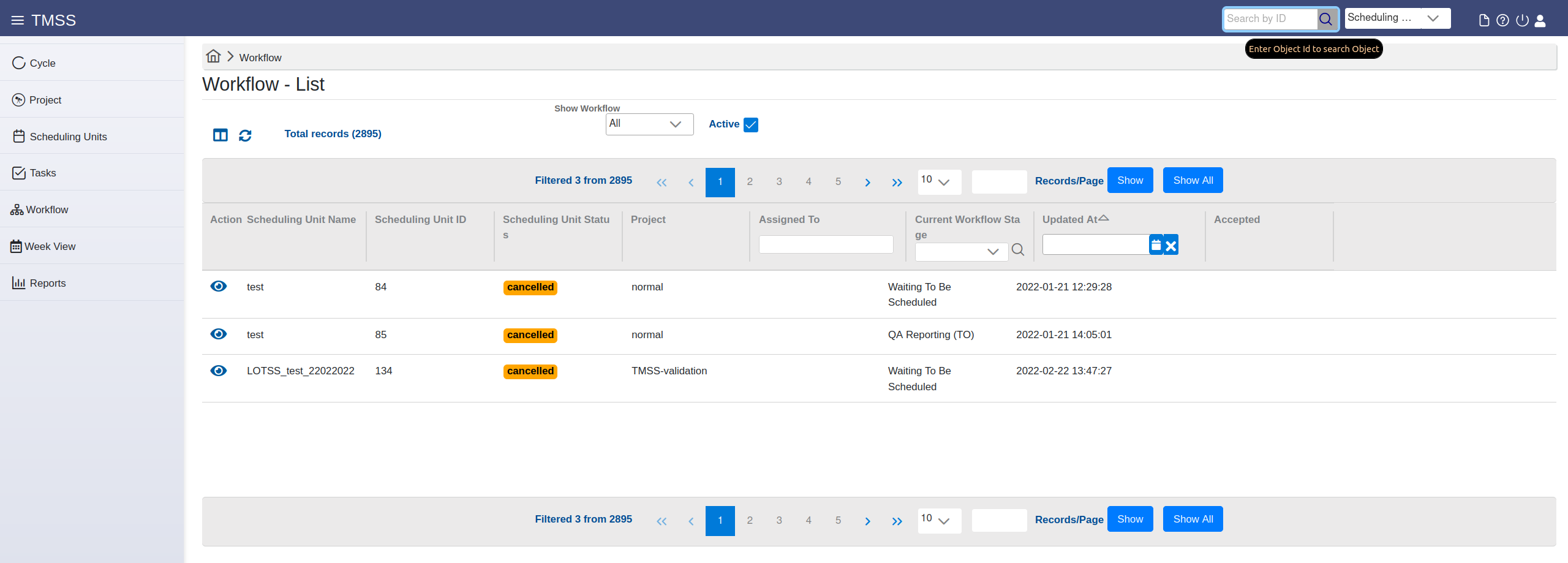

- If one wants to access workflows that require actions, we can use the workflow menu page, accessible by clicking the corresponding menu item on the TMSS main menu on the left of the page. A listing of workflows will appear:

We can see the SUs whose workflows are in the state selected from the drop-down above. The checkbox indicates that, by default, we filter on all active workflows, so the others are hidden. To find a particular SU (workflow), the user should make use of the drop-down menu options or the column filtering options. Workflows are only associated with blueprints, so the IDs referred to here are SU blueprint IDs, not draft IDs. Clicking on the 'eye' icon in the first column will open the workflow page for the corresponding row.

Managing the workflows

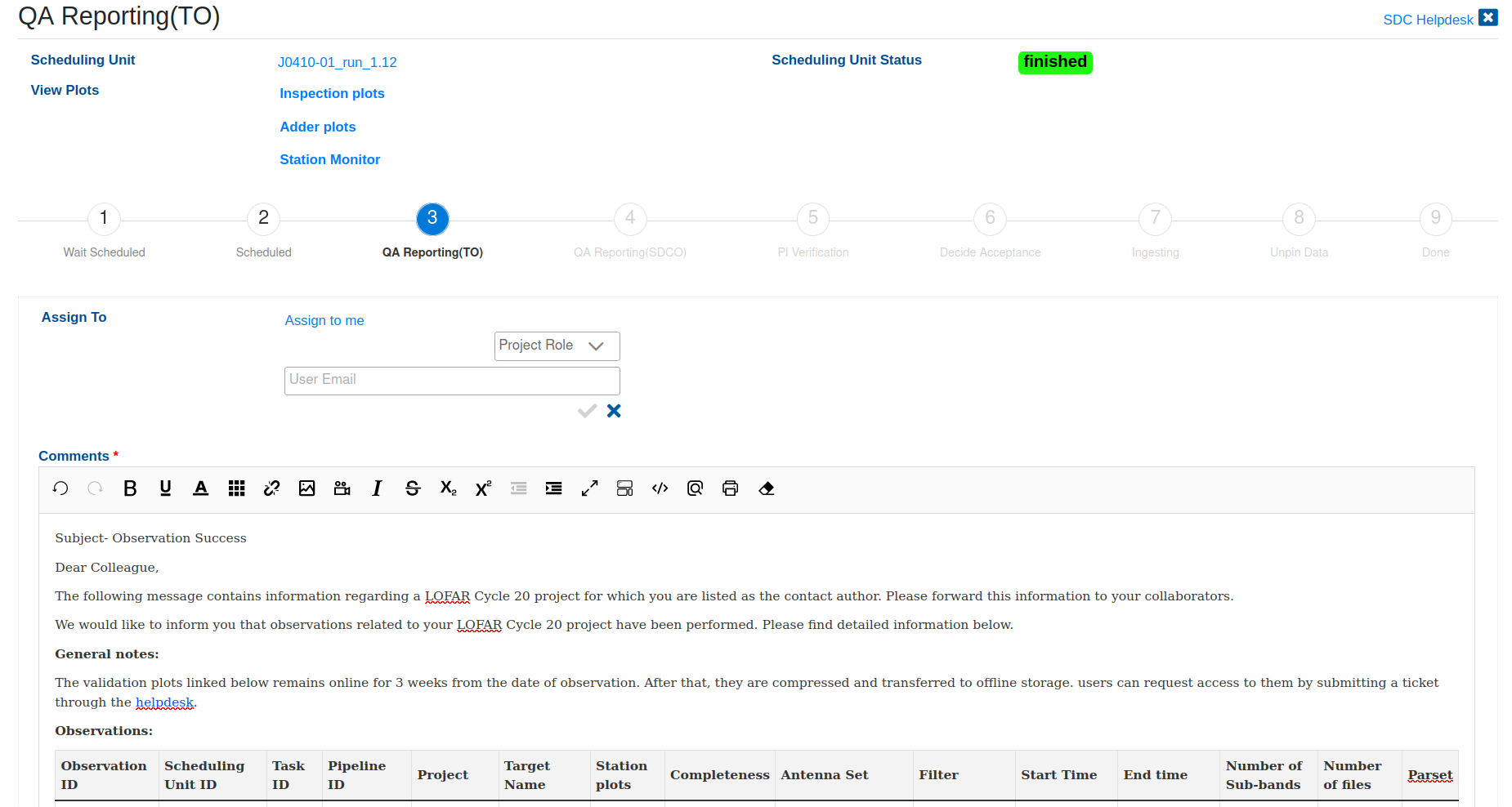

The management of the workflows is done through the dedicated workflow view page:

At its top there are links to the SU details page, as well as to the various inspection plots related to the observing run. These can be used by the TO/SDCO/PI as an aid when performing the quality assessment (QA). Below, a graphical representation of the workflow is given consisting of 9 steps, with the number highlighted in blue indicating the current level of progress. Their associated overviews are accessible depending on the role the user has.

If the workflow is not assigned to the current user, s/he can use the "assign to me" option to perform the assignment, choose a user to assign the QA to by e-mail address, or assign by role, choosing from the roles provided in the "Project Role" drop-down menu.

The QA starts at level 3, where users with the telescope operator (TO) role can enter reports or comments related to the quality of the data and the performance of the system in the provided text area. It is usually pre-populated with a standard observing report.

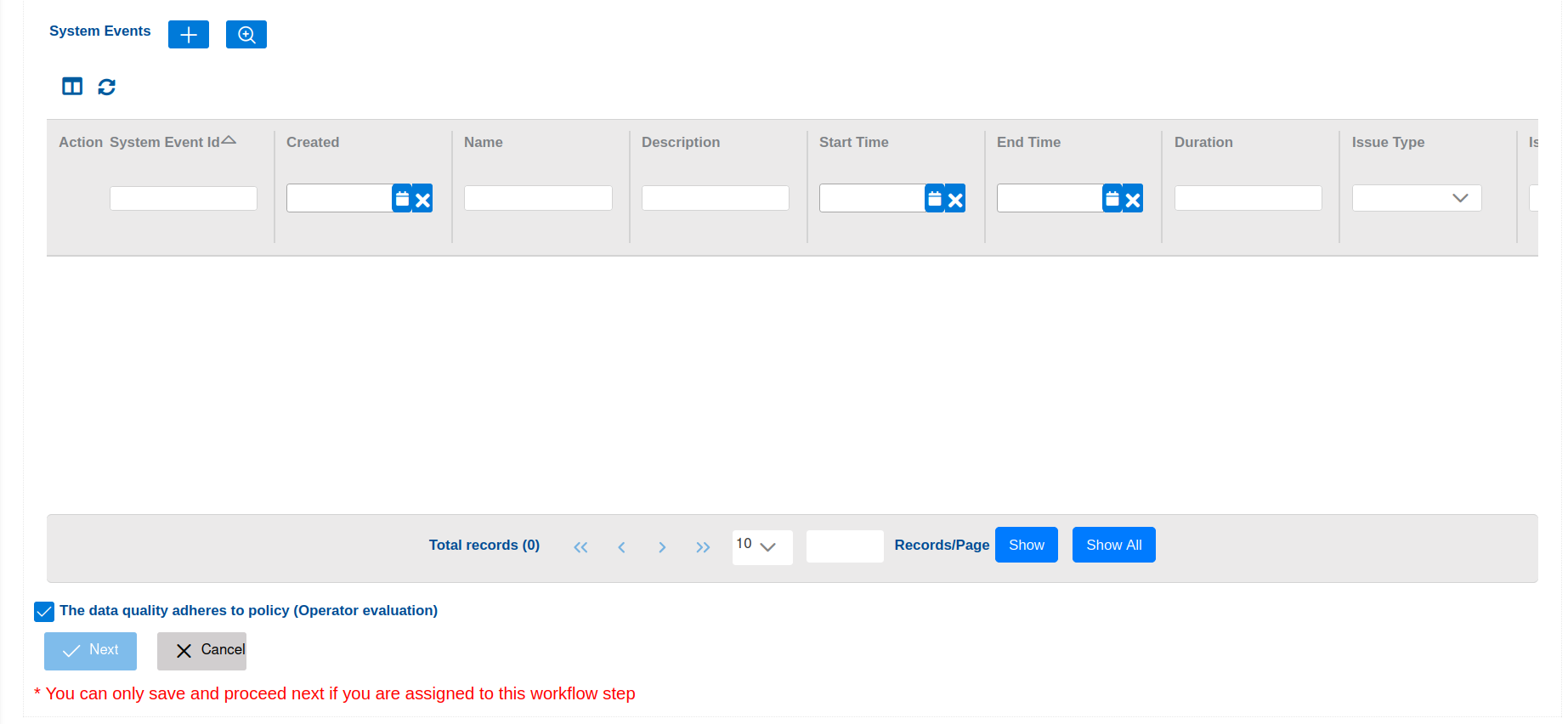

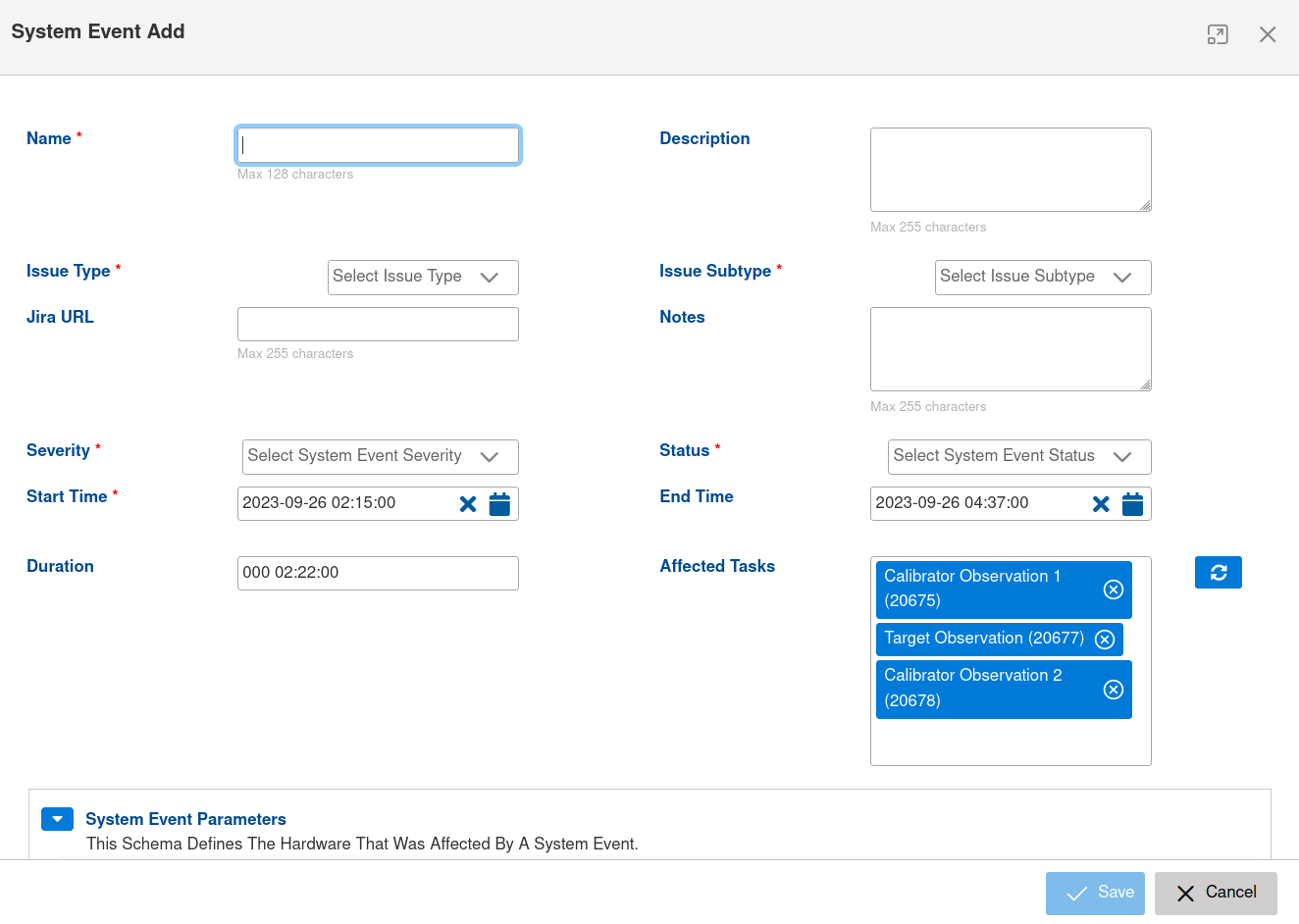

At the bottom of the page, there is a list where the TO can enter system events relevant for the QA by adding a new one (using the "+" button) or by searching for existing ones and adding them to the list (using the "Search" button next to the "+" one). The new event page opens in a pop-up where the user can enter the relevant event details:

The TO can filter the listed system events using the provided column header fields in the list.

Finally, if the TO approves that the data adheres to policy, s/he can click the "Next" button to send the QA on to the following round of assessment by the SDCO/PI.

Each of the users / roles in the workflow steps can approve or disapprove of the data quality and enter an appropriate comment. The approval state is reflected by the state of a checkbox at the bottom of the page after all of the user roles have decided on the QA state. Then, the QA assessment is finished, and the PI has an overview of the procedure. The data is unpinned / deleted or archived depending on the QA outcome. The Data Accepted column in the blueprint listing on the TMSS project view page will indicate whether the data for a given executed SU blueprint are accepted or not (false).

Please note that SDCO is still using the Jira system for handling observation reports and project users will be notified to complete the QA workflow via the corresponding ticket.

Contact author instructions

As a contact author, you will be notified by e-mail or by JIRA ticket when new reports are ready for inspection. Please make sure you can receive e-mail from ASTRON domains. You can also see all tasks assigned to you by going to the workflow page and filtering on "assigned to me".

You are asked to confirm whether you accept the data. Please write your confirmation or rejection in the box. If there are issues you want to raise that lead you to consider not accepting the data, please add them in the comment box and untick the "PI accepts" box. For the data policy, please consult the relevant information page. Your friend-of-project will contact you in JIRA for the final decision on what to do with the data. After this period you still have 4 weeks for a detailed data inspection. In case there are issues, please report them in JIRA. Please note that if you do not accept the data, they cannot be used and should be removed from your systems. We will also remove them from the LTA. A repetition will be scheduled instead if your observation is eligible for one; see again the policies.